About V2X-Sim

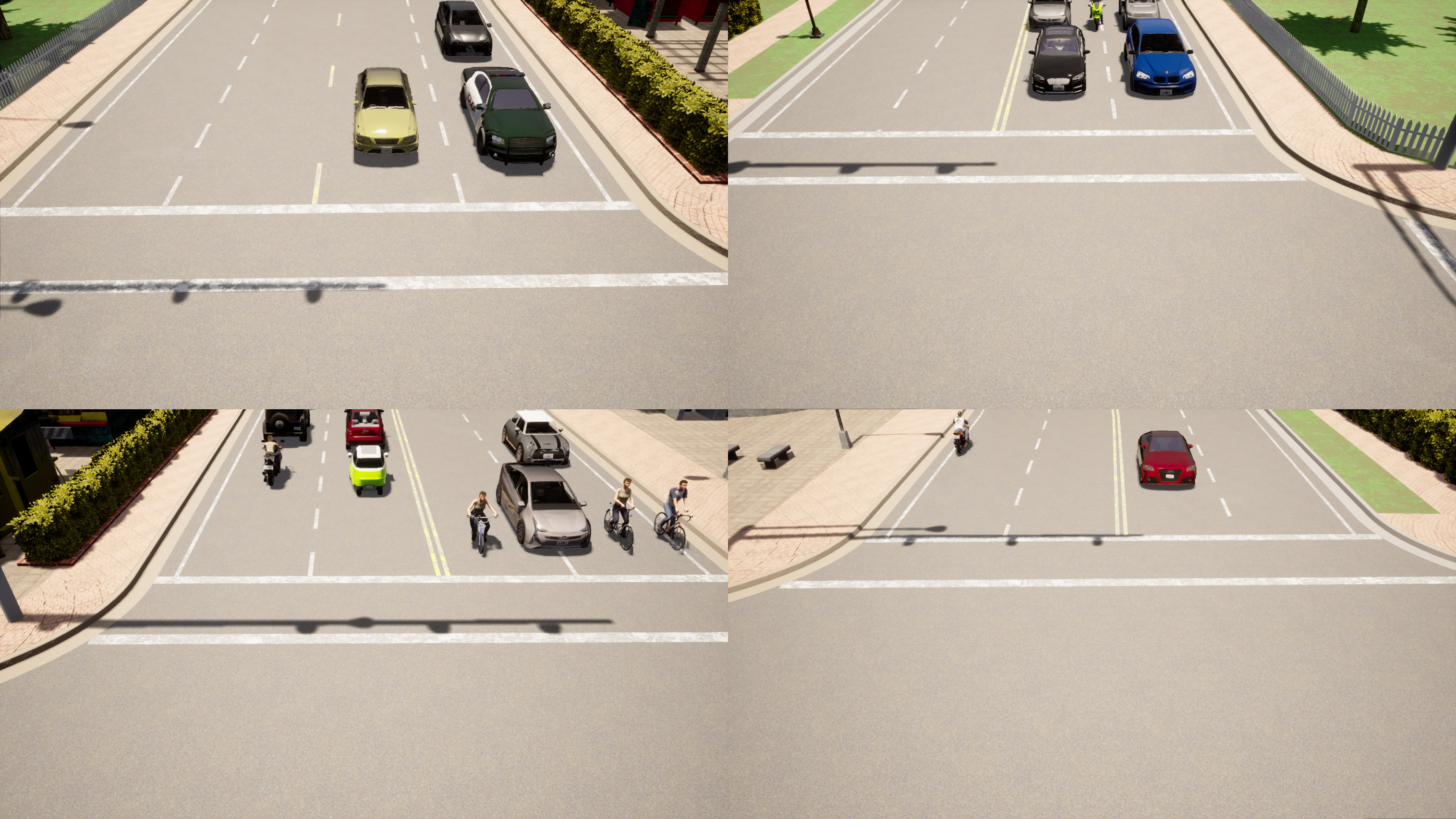

V2X-Sim, short for vehicle-to-everything simulation, is the first synthetic V2X-aided collaborative perception dataset for autonomous driving. It was developed by the AI4CE Lab at NYU and the MediaBrain Group at SJTU to stimulate multi-agent, multi-modality, multi-task perception research. Because V2X technology is still immature and operating multiple autonomous vehicles simultaneously is costly, building such a dataset in the real world is very expensive and laborious for research communities. We therefore use highly realistic CARLA-SUMO co-simulation to keep our dataset representative of real-world driving scenarios.

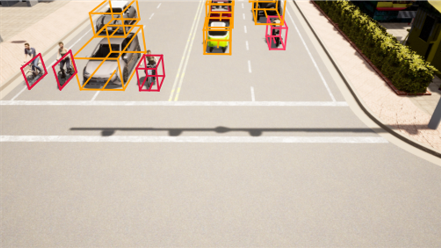

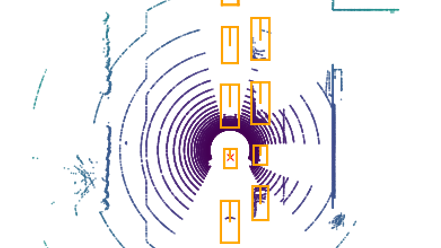

V2X-Sim provides: (1) well-synchronized sensor recordings from a road-side unit (RSU) and multiple vehicles, enabling multi-agent perception; (2) multi-modality sensor streams that facilitate multi-modality perception; and (3) diverse, well-annotated ground truth that supports various perception tasks, including detection, tracking, and segmentation.

We also build an open-source testbed and benchmark state-of-the-art collaborative perception algorithms on three tasks: detection, tracking, and segmentation.